Into the Wild with AudioScope: Unsupervised Audio-Visual Separation of On-Screen Sounds

| Efthymios Tzinis¹,² | Scott Wisdom¹ | Aren Jansen¹ | Shawn Hershey¹ | Tal Remez¹ | Daniel P.W. Ellis¹ | John R. Hershey¹ |

| ¹Google Research |

| ²University of Illinois at Urbana-Champaign |

(Resized still with or without overlaid attention map from "Whitethroat" by S. Rae, license: CC BY 2.0.)

Abstract

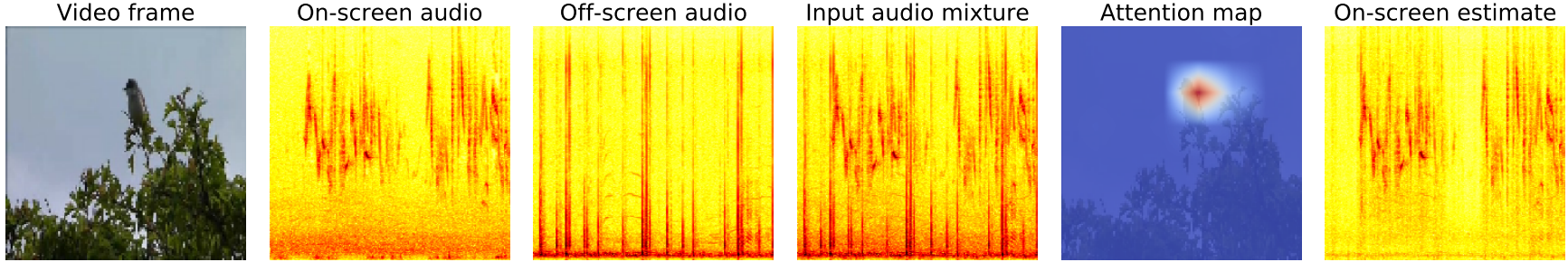

Recent progress in deep learning has enabled many advances in sound separation and visual scene understanding. However, extracting sound sources which are apparent in natural videos remains an open problem. In this work, we present AudioScope, a novel audio-visual sound separation framework that can be trained without supervision to isolate on-screen sound sources from real in-the-wild videos. Prior audio-visual separation work assumed artificial limitations on the domain of sound classes (e.g., to speech or music), constrained the number of sources, and required strong sound separation or visual segmentation labels. AudioScope overcomes these limitations, operating on an open domain of sounds, with variable numbers of sources, and without labels or prior visual segmentation. The training procedure for AudioScope uses mixture invariant training (MixIT) to separate synthetic mixtures of mixtures (MoMs) into individual sources, where noisy labels for mixtures are provided by an unsupervised audio-visual coincidence model. Using the noisy labels, along with attention between video and audio features, AudioScope learns to identify audio-visual similarity and to suppress off-screen sounds. We demonstrate the effectiveness of our approach using a dataset of video clips extracted from open-domain YFCC100m video data. This dataset contains a wide diversity of sound classes recorded in unconstrained conditions, making the application of previous methods unsuitable. For evaluation and semi-supervised experiments, we collected human labels for presence of on-screen and off-screen sounds on a small subset of clips.
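The MixIT objective mentioned in the abstract can be illustrated with a short sketch. This is not the AudioScope implementation: it assumes exactly two reference mixtures, a plain negative-SNR loss, and an exhaustive search over source-to-mixture assignments, and the names `snr_db` and `mixit_loss` are hypothetical. The audio-visual attention and the noisy on-screen labels are not modeled here.

```python
import itertools

import numpy as np


def snr_db(reference, estimate, eps=1e-8):
    """Signal-to-noise ratio (dB) of `estimate` measured against `reference`."""
    noise = reference - estimate
    return 10.0 * np.log10((np.sum(reference ** 2) + eps) / (np.sum(noise ** 2) + eps))


def mixit_loss(mixtures, est_sources):
    """Illustrative MixIT loss (assumed form, not the paper's exact loss).

    mixtures:    array of shape (num_mix, T), the reference mixtures whose sum
                 (the mixture of mixtures) was fed to the separation model.
    est_sources: array of shape (num_src, T), the separated sources.

    Each estimated source is assigned to exactly one reference mixture; the
    assignment with the lowest total negative SNR defines the loss.
    """
    num_mix = mixtures.shape[0]
    num_src = est_sources.shape[0]
    best_loss, best_assign = np.inf, None
    # Enumerate every way of assigning each source to one mixture.
    for assign in itertools.product(range(num_mix), repeat=num_src):
        assign = np.array(assign)
        # Binary mixing matrix of shape (num_mix, num_src); each column sums to 1.
        mixing = (assign[None, :] == np.arange(num_mix)[:, None]).astype(est_sources.dtype)
        remixed = mixing @ est_sources
        loss = -sum(snr_db(mixtures[i], remixed[i]) for i in range(num_mix))
        if loss < best_loss:
            best_loss, best_assign = loss, assign
    return best_loss, best_assign
```

The exhaustive search grows as `num_mix ** num_src`, which is acceptable for the handful of output sources typical of this setting but would need a cheaper approximation for larger counts.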

Paper

"Into the Wild with AudioScope: Unsupervised Audio-Visual Separation of On-Screen Sounds"

Audio-Visual Demos

| [Demo index] |

Dataset Recipe

| [Github] |

Last updated: May 2021